Time: Wednesday morning, December 20, 2023, 10:00-11:30

Location: Room 211, Science and Engineering Building (理工楼)

Abstract:

Foundation models are being developed in growing numbers and at an ever faster pace. Evaluating them fairly is increasingly challenging because their broad and continually expanding capabilities cannot be captured by standard exam-style benchmarks such as MMLU. Evaluation with open-ended questions, which do not come with gold answers, offers a potentially better solution. I’ll give an overview of the challenges and introduce our work on reference-free evaluation: first, how to reduce self-enhancement bias in LLM-based evaluation of model-human alignment; and second, how to measure fine-grained hallucinations in vision-language models using structured knowledge. Lastly, I’ll cover our most recent efforts on automatic hallucination mitigation with structured AI feedback.

Relevant papers are available at: https://arxiv.org/abs/2311.01477 and https://arxiv.org/abs/2307.02762

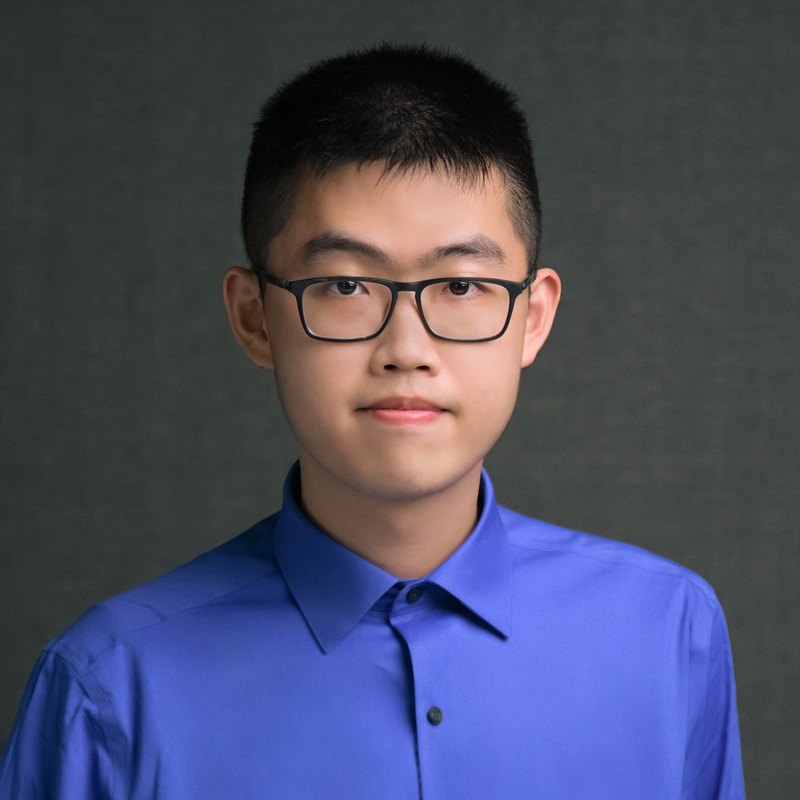

Biography:

Xinya Du is a tenure-track assistant professor in the Department of Computer Science at UT Dallas. Previously, he was a postdoctoral research associate at the University of Illinois Urbana-Champaign (UIUC). He received his Ph.D. in computer science from Cornell University and his bachelor's degree from Shanghai Jiao Tong University (SJTU). He has also worked at Microsoft Research, Google Research, and the Allen Institute for AI (AI2). His research spans natural language processing, deep learning, and artificial intelligence, with the goal of building intelligent machines whose knowledge and reasoning capabilities are both human-aligned and faithful.

His work has been published at leading NLP conferences (ACL, EMNLP, NAACL). It was included in Paper Digest's list of Most Influential ACL Papers and has been covered by major media outlets such as New Scientist. He was named a Spotlight Rising Star in Data Science by the University of Chicago and was selected for the AAAI New Faculty Highlights program.